Stage 8: Audio

Because we have already created all the functions needed to track collisions, we can now easily play a sound effect (SFX) whenever an enemy is destroyed or an enemy collides with the player.

The five things we need to do for audio are:

- Create an Audio Context (audioCtx)

- Create a gameAudio() function that will load and control the playing of our audio

- Create a global variable that will store our sounds and instantiate it in our onload() function.

- Place the .playAudio() function into our collision code so that every time there is a collision we play the sound.

- Increase our numResources variable to 20 since we will load three new resources.

The following code for our game audio is modelled on the example at https://developer.mozilla.org/en-US/docs/Web/API/BaseAudioContext/decodeAudioData. See that page for more information on what each line does. The basics are that we:

- Create an Audio Context. An Audio Context is responsible for the loading and executing of audio functions.

- Set the audio source to the contents of a data file that we load. Our data file will be whatever we pass to the function under the filename parameter.

- Once the file is loaded we update our load progress because we don’t want our game to start until all audio has been loaded.

Finally, if the save parameter is set to false we play the audio as soon as it has been decoded; otherwise we wait until the .playAudio() function is called.
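The load-progress idea described above can be sketched on its own. This is only a minimal sketch and not the tutorial's actual loadingUpdate() function: createLoadTracker, resourceLoaded, progress and onReady are hypothetical names used purely for illustration.

```javascript
// Hypothetical helper: counts finished resources and calls onReady once,
// when every expected resource has reported in.
function createLoadTracker(totalResources, onReady) {
    var loaded = 0;
    return {
        resourceLoaded: function () {
            loaded = loaded + 1;
            if (loaded === totalResources) {
                onReady();
            }
            return loaded;
        },
        progress: function () {
            return loaded / totalResources; // 0 = nothing loaded, 1 = everything loaded
        }
    };
}

// Usage: three sounds must finish loading before the game is allowed to start.
var started = false;
var tracker = createLoadTracker(3, function () { started = true; });
tracker.resourceLoaded();
tracker.resourceLoaded();
console.log(started); // false
tracker.resourceLoaded();
console.log(started); // true
```

In the tutorial code the same role is played by the loadProgress counter, the numResources total and the loadingUpdate() call.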

Add this code to the end of our JavaScript file:

function gameAudio(filename, loop, save) {

this.playMultipleTimes = save;

this.audioSourceBuffer = null; // filled in once the file has been decoded

this.audioSource = audioCtx.createBufferSource();

this.audioSource.loop = loop;

this.request = new XMLHttpRequest();

this.request.open('GET', 'sound/' + filename, true);

this.request.responseType = 'arraybuffer';

var self = this;

this.request.onload = function () {

self.audioData = self.request.response;

audioCtx.decodeAudioData(self.audioData, function (buffer) {

self.audioSourceBuffer = buffer;

if (!self.playMultipleTimes) {

self.audioSource.buffer = buffer;

self.audioSource.connect(audioCtx.destination);

self.audioSource.start(0);

}

}, function (e) {

console.log("Error with decoding audio data: " + e);

});

loadProgress = loadProgress + 1;

loadingUpdate();

};

this.request.send();

this.playAudio = function () {

var source = audioCtx.createBufferSource();

source.buffer = this.audioSourceBuffer;

source.connect(audioCtx.destination);

source.start(0);

};

}
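Note that playAudio() creates a new buffer source every time it is called. This is because an AudioBufferSourceNode can only be started once, while the decoded buffer itself can be reused as often as needed. A minimal sketch of that pattern follows. It assumes the audio context is passed in as a parameter (the tutorial uses the global audioCtx instead), and playBuffer is a hypothetical name.

```javascript
// Hypothetical helper: plays an already-decoded AudioBuffer once.
// A buffer source node is single-use, so a fresh one is created per call,
// while the decoded buffer is shared between calls.
function playBuffer(ctx, buffer, loop) {
    var source = ctx.createBufferSource();
    source.buffer = buffer;
    source.loop = Boolean(loop);
    source.connect(ctx.destination);
    source.start(0);
    return source; // keep the node if you want to call source.stop() later
}
```

In the game this could be called as playBuffer(audioCtx, explosionSound.audioSourceBuffer, false) once the sound has finished decoding.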

With this function written we can set up our global variables, instantiate our audio objects and play our explosions when there is a collision.

Add the following variables to our global variables at the start of our code:

...

var bulletSpeed = -6;

var bulletArray = [];

var maxBullets = 10;

var score = 0;

var health = 3;

var soundtrack;

var explosionSound;

var bulletExplosionSound;

var audioCtx = new (window.AudioContext || window.webkitAudioContext)();

window.onload = function () {

Inside window.onload, add these three lines at the end of the function. They create an audio object for each of our three audio files (the background music and two explosions):

window.onload = function () {

gameCanvas = document.getElementById("gameCanvas");

gameCanvas.width = canvasWidth;

gameCanvas.height = canvasHeight;

ctx = gameCanvas.getContext("2d");

...

gameCanvas.addEventListener('click', function (event) {

...

}, false);

soundtrack = new gameAudio("01_Main Theme.mp3", true, false);

explosionSound = new gameAudio("snd_explosion2.wav", false, true);

bulletExplosionSound = new gameAudio("snd_explosion1.wav", false, true);

};

Testing your game now should result in the background music playing. For the explosions to play on collision we need to add our .playAudio() function call to our two collision detection functions.
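If the music stays silent, one possible cause is the browser's autoplay policy: some browsers keep an AudioContext in the "suspended" state until the user has interacted with the page. The following is a minimal sketch of one workaround, assuming the player clicks on the canvas to start playing. resumeAudioOnFirstClick is a hypothetical name and is not part of the tutorial code.

```javascript
// Hypothetical helper: resumes a suspended audio context the first time
// the given element is clicked, then removes its own listener.
function resumeAudioOnFirstClick(ctx, target) {
    function handler() {
        target.removeEventListener('click', handler, false);
        if (ctx.state === 'suspended') {
            ctx.resume();
        }
    }
    target.addEventListener('click', handler, false);
}
```

It could be called once inside window.onload, for example as resumeAudioOnFirstClick(audioCtx, gameCanvas).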

Add one playAudio() call into checkPlayerCollision():

function checkPlayerCollision(playerObject, enemyObject) {

if ((playerObject.xcenter > enemyObject.x) && (playerObject.xcenter < enemyObject.x + enemyObject.width) && (playerObject.ycenter > enemyObject.y) && (playerObject.ycenter < enemyObject.y + enemyObject.height)) {

explosionSound.playAudio();

enemyObject.resetLocation();

health = health - 1;

playerObject.flashPlayer();

if (health == 0) {

endGame();

}

}

}

Add our playAudio() call into checkEnemyCollision():

function checkEnemyCollision(object1, object2) {

if ((object2.x + object2.width / 2 > object1.x) && (object2.x + object2.width / 2 < object1.x + object1.width) && (object2.y + object2.height / 2 > object1.y) && (object2.y + object2.height / 2 < object1.y + object1.height)) {

object1.y = -200;

object1.x = 30 + Math.random() * (canvasWidth - object1.width - 30);

object2.active = false;

player.score = player.score + 1;

bulletExplosionSound.playAudio();

if ((player.score % 20 == 0)) {

enemy1.yspeed = enemy1.yspeed + 1;

enemy2.yspeed = enemy2.yspeed + 1;

enemy3.yspeed = enemy3.yspeed + 1;

}

}

}

Play your game now and the sound effects should be working, along with the background music. Any audio format that the browser can decode can be used; depending on the browser this can include MP3, WebM, WAV, MP4 and Ogg.
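Because format support depends on the browser, it can be checked before choosing a file. The sketch below uses canPlayType(), which describes what an audio element can play; that decodeAudioData() accepts the same formats is an assumption here. pickPlayableFile and the .ogg file name are hypothetical.

```javascript
// Hypothetical helper: returns the first file whose MIME type is reported
// as playable, or null if none qualify.
// canPlay is expected to behave like HTMLMediaElement.canPlayType(), which
// returns "probably", "maybe" or "" (the empty string means "cannot play").
function pickPlayableFile(candidates, canPlay) {
    for (var i = 0; i < candidates.length; i++) {
        if (canPlay(candidates[i].type) !== "") {
            return candidates[i].file;
        }
    }
    return null;
}

// In a browser the check can come from an audio element.
if (typeof document !== "undefined") {
    var probe = document.createElement("audio");
    var chosen = pickPlayableFile([
        { file: "snd_explosion1.ogg", type: "audio/ogg" }, // hypothetical file
        { file: "snd_explosion1.wav", type: "audio/wav" }
    ], function (type) { return probe.canPlayType(type); });
    console.log(chosen);
}
```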

Data compression reduces the size of a file, saving on storage requirements and transmission times.

You will notice that the files we loaded use two different file extensions: .wav and .mp3.

While a WAV file can contain compressed data, it commonly stores data in the same format as CDs (LPCM format), which is uncompressed.
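To get a feel for the difference, the size of uncompressed CD-quality audio (44,100 samples per second, 16 bits per sample, two channels) can be worked out directly. The three-minute song length and the 128 kbps MP3 bitrate below are assumptions chosen for illustration, and both function names are hypothetical.

```javascript
// Size of uncompressed LPCM audio in bytes (ignores the small file header).
function pcmSizeBytes(seconds, sampleRate, bitsPerSample, channels) {
    return seconds * sampleRate * (bitsPerSample / 8) * channels;
}

// Size of a constant-bitrate compressed stream in bytes.
function compressedSizeBytes(seconds, bitsPerSecond) {
    return seconds * bitsPerSecond / 8;
}

var cdBytes = pcmSizeBytes(180, 44100, 16, 2);   // three minutes, CD quality
var mp3Bytes = compressedSizeBytes(180, 128000); // assumed 128 kbps MP3

console.log(cdBytes);                         // 31752000
console.log(mp3Bytes);                        // 2880000
console.log((cdBytes / mp3Bytes).toFixed(1)); // "11.0"
```

Under these assumptions the uncompressed version is roughly eleven times larger.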

There are two types of compression techniques: lossless and lossy. The simplest form of lossless compression looks for repeated patterns that can be indexed and replaced with smaller symbols or tokens. Files compressed with lossless compression techniques produce an exact replica of the original file when decompressed.
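As a small illustration of the lossless idea, here is a sketch of run-length encoding, one of the simplest schemes of this kind. It replaces each run of repeated characters with the character and a count. It is a stand-in chosen for clarity and is not what any particular audio format uses; rleEncode and rleDecode are hypothetical names.

```javascript
// Replace each run of identical characters with a [character, count] pair.
function rleEncode(text) {
    var pairs = [];
    var i = 0;
    while (i < text.length) {
        var ch = text[i];
        var count = 1;
        while (i + count < text.length && text[i + count] === ch) {
            count = count + 1;
        }
        pairs.push([ch, count]);
        i = i + count;
    }
    return pairs;
}

// Rebuild the original text from the pairs.
function rleDecode(pairs) {
    var out = "";
    for (var j = 0; j < pairs.length; j++) {
        out = out + pairs[j][0].repeat(pairs[j][1]);
    }
    return out;
}

var original = "AAAABBBCCD";
var encoded = rleEncode(original);
console.log(JSON.stringify(encoded));         // [["A",4],["B",3],["C",2],["D",1]]
console.log(rleDecode(encoded) === original); // true
```

The decoded text matches the original exactly, which is what makes the scheme lossless.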

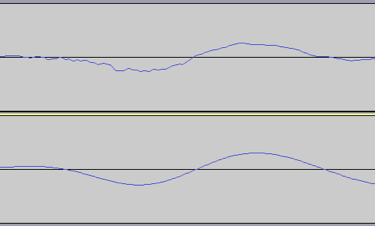

MP3 files use lossy compression techniques. With lossy techniques, redundant data or less important information is thrown away. For example, MP3 uses an audio compression technique called “perceptual audio coding and psychoacoustic compression” to discard sounds that humans can’t hear. The image above shows two audio waveforms: the first is a CD-quality version and the second is a heavily compressed MP3 file. Notice the smoother waveform of the MP3. This is because the compression algorithm has removed some of the audio precision and approximated the sound wave.

The higher the compression rate, the more information is “thrown away”. While modern lossy compression techniques sound or look (e.g. JPEG) good, they still only approximate the original sound, picture or video file when decompressed.
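The effect of throwing information away can be shown with a much simpler technique than the one MP3 uses. The sketch below is plain quantization, used here only as a stand-in: each sample is snapped to the nearest of a few allowed levels, so fewer bits are needed to store it but the original value cannot be recovered. The function name and sample values are hypothetical.

```javascript
// Snap each sample (expected range -1..1) to the nearest of `levels`
// evenly spaced values. Fewer levels means more information is lost.
function quantize(samples, levels) {
    var step = 2 / (levels - 1);
    return samples.map(function (s) {
        return Math.round(s / step) * step;
    });
}

var samples = [0.1, 0.26, -0.7, 0.95];
var coarse = quantize(samples, 5); // allowed values: -1, -0.5, 0, 0.5, 1
console.log(coarse);               // [ 0, 0.5, -0.5, 1 ]

// Two different inputs can end up identical, so the step is not reversible.
console.log(quantize([0.1], 5)[0] === quantize([0.2], 5)[0]); // true
```

Unlike a lossless scheme, there is no decode step that could restore 0.1 and 0.2 from the stored value 0.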

When released, MP3 files allowed music to be compressed to sizes small enough for songs to be easily transmitted across the Internet. This resulted in an increase in music piracy (the illegal distribution of copyrighted songs).
